An Introduction to NLP: Advice from an Advanced Developer on Building a Dialog System

I first became acquainted with NLP while studying at university, but I never saw myself pursuing a career in that direction. However, after graduation, I landed my first job, and NLP turned out to be a key part of the role.

There I collected, processed, and annotated large volumes of text data from various web pages. As an avid programmer, I got plenty of practice, but my theoretical grounding was lacking. Later I discovered Coursera and many wonderful teachers there.

Over the past six years, I’ve gained valuable experience developing dialog systems, and I believe the insights I can share will be useful both to newcomers to the field and to experienced NLP professionals.

ChatScript in NLP: Never Again

I’ll begin with an epic story of failure. At the start of my career, I came across a project where the NLP component was the basis of the product (a rarity). The customer had chosen the ChatScript platform for its dialog system. At the time, ChatScript had a reputation for being at the forefront of NLP and looked like an attractive investment, particularly since bots built with it had repeatedly won the Loebner Prize (awarded to the most “humanlike” conversational programs).

In my experience, there’s no “magic under the hood” in ChatScript: it is essentially an advanced set of if-statements and regular expressions. Given that ChatScript lacks powerful tools for building chatbots, it seems to me that the only person who will keep winning the annual Loebner Prize with ChatScript is the platform’s creator.
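To make the “ifs and regular expressions” point concrete, here is a minimal Python sketch of a pattern-based responder. It is not ChatScript syntax or its engine; the rules and replies are hypothetical and only illustrate how such a bot behaves: it answers the phrasings its patterns anticipate and falls back on everything else.

```python
import re

# Hypothetical rule table in the spirit of pattern-based bots: each rule is a
# regular expression plus a reply template. Plain Python, not ChatScript syntax.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "Hello! How can I help?"),
    (re.compile(r"\bwhat time is it\b", re.IGNORECASE), "Let me check the time."),
    (re.compile(r"\bwhere is (?P<place>[\w\s]+?)\??$", re.IGNORECASE), "Looking up {place}..."),
]

FALLBACK = "Sorry, I didn't understand that."


def respond(text: str) -> str:
    """Return the reply of the first rule whose pattern matches, else a fallback."""
    for pattern, reply in RULES:
        match = pattern.search(text)
        if match:
            # Insert any named groups (e.g. the place name) into the reply.
            return reply.format(**match.groupdict())
    return FALLBACK
```

For example, `respond("Where is Kryivka?")` returns `"Looking up Kryivka..."`, while `respond("Could you tell me the current time?")` falls through to the fallback because no pattern anticipates that wording. Covering every paraphrase means writing ever more rules, which is why this approach scales poorly.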

Architecture Creation: The Logic & Main Working Principle

As an NLP specialist, once you break away from outdated technologies like ChatScript, you’ll be able to choose the best architectural solution for the dialog system you’re building. There are plenty of good reference systems on the market to compare against: Siri, Google Assistant, Microsoft Cortana, and Amazon Alexa, for example. Their power is neatly wrapped in simplicity: they all let you send messages, make calls, find a location, and more in an easy, straightforward way.

The first thing a programmer should pay attention to is the architectural similarity of all the dialog systems above: each request is processed by a pipeline of modules. The key task, recognizing the user’s intention (the intent), is solved with a standard ML technique, multiclass classification. Accurate analysis of any data is impossible without proper sorting and labeling, and organizing data is harder when an item can carry several labels at once. Imagine that you are categorizing a library of Ukrainian literature: Serhiy Zhadan’s collection falls under several labels, including “poetry,” “prose,” and “suchukrlit” (contemporary Ukrainian literature). A request that expresses an individual’s wish, however, should carry no such ambiguity, so intent labels are usually mutually exclusive: you ask for poetry, or prose, or a collection, but not all at once.
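The difference between mutually exclusive (multiclass) and overlapping (multi-label) labels shows up in the decision rule. The sketch below assumes a model has already produced one raw score per label; the scores and the 0.5 threshold are hypothetical. Multiclass picks a single winner with softmax and argmax, while multi-label decides each label independently with a sigmoid.

```python
import math


def softmax(scores: dict) -> dict:
    """Turn raw scores into a probability distribution that sums to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def predict_multiclass(scores: dict) -> str:
    """Multiclass: labels compete and exactly one wins (argmax)."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


def predict_multilabel(scores: dict, threshold: float = 0.5) -> set:
    """Multi-label: each label gets its own sigmoid and its own yes/no decision."""
    return {label for label, s in scores.items() if 1 / (1 + math.exp(-s)) >= threshold}
```

With scores like `{"poetry": 2.0, "prose": 1.5, "suchukrlit": 3.0}`, `predict_multilabel` keeps all three labels, as the Zhadan example requires, whereas `predict_multiclass` always returns exactly one label, which is what an intent classifier needs.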

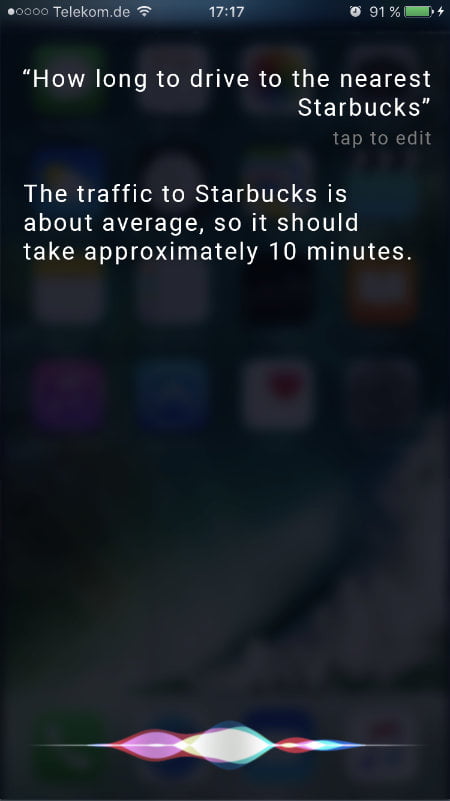

The principle of multiclass classification is simple: you collect a corpus of utterances and label each one with the corresponding human intention, and a model trained on this data learns to understand what we want from it. A typical example is Apple’s Siri. The system receives a text sequence as input, uses the trained model to determine the user’s intention, and returns the result. In the image above you can see how the system recognizes a person’s intention and represents it on the backend as the intent nav.time.closest.
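As a minimal illustration of the collect, label, train, predict loop, the sketch below trains a multinomial Naive Bayes classifier in plain Python. It is a deliberately simple stand-in, not what Siri uses; the training utterances and the `alarm.set` intent are hypothetical, while nav.time.closest and nav.directions come from this article.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split into word tokens."""
    return re.findall(r"\w+", text.lower())


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples):
        # examples: iterable of (utterance, intent) pairs
        self.word_counts = defaultdict(Counter)  # intent -> word frequencies
        self.intent_counts = Counter()
        self.vocab = set()
        for text, intent in examples:
            tokens = tokenize(text)
            self.intent_counts[intent] += 1
            self.word_counts[intent].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        total = sum(self.intent_counts.values())
        best_intent, best_score = None, -math.inf
        for intent, n in self.intent_counts.items():
            words = self.word_counts[intent]
            denom = sum(words.values()) + len(self.vocab)
            # log P(intent) + sum over tokens of log P(token | intent)
            score = math.log(n / total)
            for tok in tokenize(text):
                score += math.log((words[tok] + 1) / denom)
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


# Hypothetical labeled corpus: each utterance is tagged with exactly one intent.
TRAINING_DATA = [
    ("when is the next bus", "nav.time.closest"),
    ("when does the nearest train leave", "nav.time.closest"),
    ("what time is the next tram", "nav.time.closest"),
    ("give me directions to the hotel", "nav.directions"),
    ("how do I get to the museum", "nav.directions"),
    ("show me the way to the station", "nav.directions"),
    ("set an alarm for seven", "alarm.set"),
    ("wake me up at six", "alarm.set"),
]

clf = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
```

For instance, `clf.predict("when is the next train")` returns `"nav.time.closest"`. A production system would use far more data and a stronger model, but the interface (labeled utterances in, a single intent out) stays the same.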

Because there is almost always a gap between what is said and what is heard, processing natural language is more difficult than finding true/false matches. The answer to “Where is Kryivka?” depends on (1) your location, (2) the desired mode of transport, (3) the venue’s opening hours, and (4) road traffic. That is why an intent is modeled as a form consisting of several slots, and the form must be filled in before the action can be performed.

For example, consider a tourist exploring the city who has forgotten that the subway is no longer running. When he asks how to get from his current location to the hotel, we represent his intention as nav.directions, which has two slots: where from and where to. Under the hood of the dialog system, it looks like this:

User: “Give me directions to the Hotel Grand Budapest.”

- Intent classifier: nav.directions

- Slot tagger: @to{HotelGrandBudapest}

- Dialogue manager: all slots are filled, here’s the route

Assistant: “Here’s the route.”
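The “form with slots” idea can be sketched as a small data structure plus one dialogue-manager step. The slot names `from` and `to`, the context dictionary, and the `("ask", ...)` / `("execute", ...)` return convention are hypothetical; the point is that the action runs only once every required slot is filled, here with the origin taken from the user’s current location.

```python
from dataclasses import dataclass, field


@dataclass
class IntentFrame:
    """An intent modeled as a form: its name, required slots, and slots filled so far."""
    name: str
    required_slots: tuple[str, ...]
    slots: dict[str, str] = field(default_factory=dict)

    def missing_slots(self) -> list[str]:
        return [s for s in self.required_slots if s not in self.slots]


def dialogue_manager_step(frame: IntentFrame, context: dict[str, str]):
    """Return ("ask", slot) while the form is incomplete, else ("execute", slots)."""
    # Fill slots the user did not mention from context (e.g. current location).
    for slot in frame.missing_slots():
        if slot in context:
            frame.slots[slot] = context[slot]
    missing = frame.missing_slots()
    if missing:
        # The form is incomplete: ask a follow-up question for the first gap.
        return ("ask", missing[0])
    return ("execute", dict(frame.slots))
```

For the request above, a frame built as `IntentFrame("nav.directions", ("from", "to"), {"to": "HotelGrandBudapest"})` plus a context holding the current location yields `("execute", ...)`. If no location is available, the same call returns `("ask", "from")`, which the dialog system would turn into a follow-up question such as “Where are you starting from?”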

Slot recognition is also a difficult task, and it requires a separate model. Regardless of its complexity, I recommend the BIO tagging scheme (also called IOB, short for “beginning-inside-outside”), a common format for annotating text in NLP. The text is broken down into tokens, and only the tokens relevant to a specific intent (and the handler that processes it) are marked as slots. The prefix “B” marks the first token of a slot, “I” marks each following token inside the same slot, and “O” marks tokens outside any slot. The output is a sequence of tokens with tags, and these tags serve as the labels when training the slot-tagging model.

| Token | Find | me | way | to | Museum | ATO | in | Dnipro |
|---|---|---|---|---|---|---|---|---|
| Tag | O | O | O | O | B-TO | I-TO | O | B-LOC |
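The tagging scheme is easy to implement in both directions. The sketch below converts slot spans into BIO tags and decodes tags back into slots; the span format `(start, end, label)` with an exclusive end index is an assumption made for this example. Applied to the sentence in the table, it reproduces the tags shown there.

```python
def spans_to_bio(tokens, spans):
    """Tag tokens in BIO format. spans: list of (start, end, label), end exclusive."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


def bio_to_slots(tokens, tags):
    """Group B-/I- tagged tokens back into (label, text) slots."""
    slots = []
    label, words = None, []
    for token, tag in zip(tokens, tags):
        # A "B-" tag, or an "I-" tag that does not continue the open slot, starts a new slot.
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label):
            if label:
                slots.append((label, " ".join(words)))
            label, words = tag[2:], [token]
        elif tag.startswith("I-"):
            words.append(token)
        else:  # "O" closes any open slot
            if label:
                slots.append((label, " ".join(words)))
            label, words = None, []
    if label:
        slots.append((label, " ".join(words)))
    return slots


tokens = ["Find", "me", "way", "to", "Museum", "ATO", "in", "Dnipro"]
tags = spans_to_bio(tokens, [(4, 6, "TO"), (7, 8, "LOC")])
```

Decoding the same tags with `bio_to_slots(tokens, tags)` yields `[("TO", "Museum ATO"), ("LOC", "Dnipro")]`, which is the structured form the dialog manager consumes.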

Working with the Architecture: A Cascade of Models

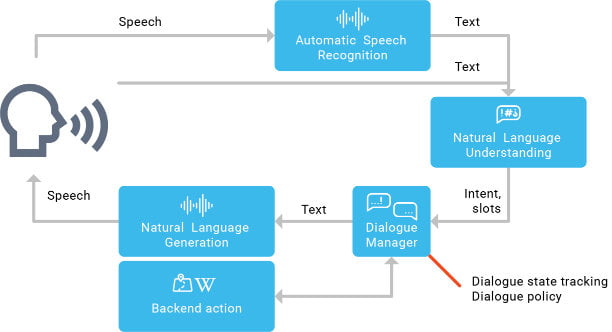

Your next steps will be developing and configuring the individual modules. The image above shows the entire pipeline of the dialog system. Understanding how to work with each module is key to being an effective NLP engineer. As you can see, the scheme itself is simple: the ASR module converts human speech into text, the NLU module then structures and tags it, and finally the dialog manager interprets the result as commands and directs the entire system. At the output, the user receives the expected result accompanied by generated text, an audio message, a link, or an image.
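The cascade can be expressed as plain function composition, where each module consumes the previous module’s output. All four functions below are hypothetical stubs (the “ASR” just decodes bytes, and the “NLU” is a single hard-coded rule); in a real system each would wrap a trained model or an external service.

```python
# Hypothetical stub modules; each stands in for a trained model or service.
def asr(audio: bytes) -> str:
    # Stand-in for speech recognition: the "audio" here is just UTF-8 text.
    return audio.decode("utf-8")


def nlu(text: str) -> dict:
    # Stand-in for the intent classifier + slot tagger: one hard-coded rule.
    prefix = "give me directions to"
    if text.lower().startswith(prefix):
        destination = text[len(prefix):].strip(" .")
        return {"intent": "nav.directions", "slots": {"to": destination}}
    return {"intent": "unknown", "slots": {}}


def dialogue_manager(parse: dict) -> dict:
    # Decide what to do with the structured request.
    if parse["intent"] == "nav.directions" and "to" in parse["slots"]:
        return {"action": "show_route", "to": parse["slots"]["to"]}
    return {"action": "fallback"}


def nlg(decision: dict) -> str:
    # Turn the decision into a human-readable reply.
    if decision["action"] == "show_route":
        return f"Here's the route to {decision['to']}."
    return "Sorry, I didn't get that."


def run_pipeline(audio: bytes) -> str:
    # ASR -> NLU -> Dialogue Manager -> NLG, each consuming the previous output.
    return nlg(dialogue_manager(nlu(asr(audio))))
```

`run_pipeline(b"Give me directions to the Hotel Grand Budapest.")` returns `"Here's the route to the Hotel Grand Budapest."`. Keeping the interfaces between modules this narrow is what lets you swap or retrain one module without touching the others.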

I’ll describe each module in a bit more detail:

Automatic Speech Recognition (ASR) takes the user’s spoken input and converts it into text for further processing. It is important that the system extract as many features as possible from the recognized text, since the next module depends on them to work correctly.

Natural Language Understanding (NLU) applies the trained models (the intent classifier and the slot tagger) to the recognized text and, on this basis, creates an additional layer of structured information that drives the rest of the architecture and serves as the foundation of the Dialogue Manager module.

The Dialogue Manager is the locomotive of the dialog system: it decides whether to execute or ignore a user’s request (see the previous illustration). It is closely tied to backend processes that can access third-party services or databases. For example, when asked about Lviv City Hall, the backend can query Wikipedia and Google Maps in parallel to provide two relevant answers.
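Querying several backends in parallel is a natural fit for asynchronous I/O. The sketch below uses Python’s `asyncio`; `fetch_summary` and `fetch_location` are hypothetical placeholders that simulate latency with `asyncio.sleep` instead of calling the real Wikipedia or Google Maps APIs.

```python
import asyncio


# Hypothetical backend clients; real ones would call external APIs.
async def fetch_summary(place: str) -> str:
    await asyncio.sleep(0.1)  # simulate network latency
    return f"summary of {place}"


async def fetch_location(place: str) -> str:
    await asyncio.sleep(0.1)  # simulate network latency
    return f"map location of {place}"


async def answer_about(place: str) -> list[str]:
    # gather() runs both coroutines concurrently and returns results in argument order.
    return list(await asyncio.gather(fetch_summary(place), fetch_location(place)))


if __name__ == "__main__":
    print(asyncio.run(answer_about("Lviv City Hall")))
```

Because `asyncio.gather` runs both coroutines concurrently, the total wait is roughly that of the slower request rather than the sum of both.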

Natural Language Generation (NLG) is the final stage in the machine’s handling of the human request, where it produces the end result. Having collected the necessary information from all previous modules, it generates a relevant response in human language.

In Summary

Although the path from “Hey Siri” to the desired result looks convoluted, it is not as complicated as it may seem. The architecture of a dialog solution is a clear sequence of independent modules, each handling a specific step of text processing: reading, tagging, interpretation, and so on. The success of the whole system depends not only on how well the models are trained but also on how clearly each module is designed.

Future and current NLP engineers will benefit from several other resources, many of which taught me to build individual modules and combine them into a dialog system architecture better and faster. They include:

- Natural Language Processing with Python: The authors explain in simple terms how to use NLTK for Python, build a dictionary, and parse texts.

- Coursera: I often recommend this popular platform to fans of online learning.

- Speech & Language Processing course: This Stanford course offers a lot of useful documentation and information.

Thank you for your time and attention. I hope you enjoyed reading about my experience in NLP!